
Introduction

Wazuh is an open-source security analytics platform that provides a production-ready setup for analyzing your IT environment. It packs many of the features that business-critical environments need. Wazuh started as a fork of the OSSEC HIDS and adds rich web applications on top of it.

Wazuh is available for most operating systems, including Linux, OpenBSD, macOS, Solaris, Windows and FreeBSD. In this guide, we walk you through the setup on a CentOS Linux server.

What are the features available in Wazuh?

- Intrusion Detection

- File Integrity Monitoring

- Security Analytics

- Log Data Analysis

- Configuration Assessment

- Vulnerability Detection

- Incident Response

- Cloud Security

- Regulatory Compliance

- Container Security

Operating System and Hostname

In our setup, we use two servers: one runs the Wazuh Manager, and the other runs Elasticsearch and Kibana.

192.168.0.130 | wazuh.linuxsysadmins.local # Wazuh Manager

192.168.0.131 | elastic.linuxsysadmins.local # Elastic server

Both hostnames have entries in our DNS server.

[root@wazuh ~]# hostnamectl status

Static hostname: localhost.localdomain

Transient hostname: wazuh.linuxsysadmins.local

Icon name: computer-vm

Chassis: vm

Machine ID: 36618758588646fb9bd7e5ceb0e73a70

Boot ID: 39ebd50cc4fb4fdead23201f727910a9

Virtualization: kvm

Operating System: CentOS Linux 7 (Core)

CPE OS Name: cpe:/o:centos:centos:7

Kernel: Linux 3.10.0-1062.el7.x86_64

Architecture: x86-64

[root@wazuh ~]#

To set up Wazuh, we are using CentOS 7.7:

[root@wazuh ~]# cat /etc/redhat-release

CentOS Linux release 7.7.1908 (Core)

[root@wazuh ~]#

Required ports for Wazuh Manager

The following ports are required by Wazuh.

514/udp, 514/tcp - Syslog.

1514/udp, 1514/tcp - Events received from the agents.

1515/tcp - Agent registration.

1516/tcp - Wazuh cluster communication.

55000/tcp - Wazuh API (incoming requests).

Let’s open them with the firewall-cmd command.

# firewall-cmd --permanent --add-port={514,1514,1515,1516,55000}/tcp

# firewall-cmd --permanent --add-port={514,1514}/udp

# firewall-cmd --reload

# firewall-cmd --list-all

Make sure both the TCP and UDP ports are listed.
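To check the result from a script rather than by eye, the sketch below compares the space-separated list printed by firewall-cmd --list-ports against the ports listed above. missing_ports is our own hypothetical helper; it only inspects the string it is given and does not call firewall-cmd itself.

```shell
#!/usr/bin/env bash
# missing_ports: hypothetical helper that prints every required Wazuh
# port/protocol pair absent from a space-separated port list, such as the
# output of "firewall-cmd --list-ports". Prints nothing when all are open.
missing_ports() {
  local open=" $* "   # pad with spaces so each entry is matched whole
  local entry
  for entry in 514/tcp 514/udp 1514/tcp 1514/udp 1515/tcp 1516/tcp 55000/tcp; do
    case "$open" in
      *" $entry "*) ;;                # already open, nothing to report
      *) printf '%s\n' "$entry" ;;    # missing from the firewall rules
    esac
  done
}
```

Usage: missing_ports "$(firewall-cmd --list-ports)" prints nothing when every required port is open, and one port/protocol pair per line otherwise.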

SELinux Configuration

We are not going to disable SELinux; it stays in enforcing mode throughout this setup.

[root@wazuh ~]# sestatus

SELinux status: enabled

SELinuxfs mount: /sys/fs/selinux

SELinux root directory: /etc/selinux

Loaded policy name: targeted

Current mode: enforcing

Mode from config file: enforcing

Policy MLS status: enabled

Policy deny_unknown status: allowed

Max kernel policy version: 31

[root@wazuh ~]#

Resolving dependencies

First of all, install the required development tools using Yum.

# yum -y install gcc gcc-c++ make policycoreutils-python automake autoconf libtool

More dependencies are required to build CPython. Enable the EPEL repository and install the required packages:

# yum install epel-release yum-utils -y

# yum-builddep python34 -y

The required dependencies are now installed:

Installed:

bluez-libs-devel.x86_64 0:5.44-5.el7 bzip2.x86_64 0:1.0.6-13.el7 bzip2-devel.x86_64 0:1.0.6-13.el7

expat-devel.x86_64 0:2.1.0-10.el7_3 gcc-c++.x86_64 0:4.8.5-39.el7 gdbm-devel.x86_64 0:1.10-8.el7

gmp-devel.x86_64 1:6.0.0-15.el7 libX11-devel.x86_64 0:1.6.7-2.el7 libffi-devel.x86_64 0:3.0.13-18.el7

mesa-libGL-devel.x86_64 0:18.3.4-6.el7_7 ncurses-devel.x86_64 0:5.9-14.20130511.el7_4 net-tools.x86_64 0:2.0-0.25.20131004git.el7

openssl-devel.x86_64 1:1.0.2k-19.el7 readline-devel.x86_64 0:6.2-11.el7 sqlite-devel.x86_64 0:3.7.17-8.el7_7.1

systemtap-sdt-devel.x86_64 0:4.0-10.el7_7 tcl-devel.x86_64 1:8.5.13-8.el7 tix-devel.x86_64 1:8.4.3-12.el7

tk-devel.x86_64 1:8.5.13-6.el7 valgrind-devel.x86_64 1:3.14.0-16.el7 xz-devel.x86_64 0:5.2.2-1.el7

zlib-devel.x86_64 0:1.2.7-18.el7

Download and Install Wazuh

Download the Wazuh 3.11.4 source code from the GitHub repository.

# curl -Ls https://github.com/wazuh/wazuh/archive/v3.11.4.tar.gz | tar zx

The curl output is piped straight into tar, which extracts the archive into the current directory.

[root@wazuh ~]# ls

anaconda-ks.cfg wazuh-3.11.4

[root@wazuh ~]# cd wazuh-3.11.4/

[root@wazuh wazuh-3.11.4]#

[root@wazuh wazuh-3.11.4]# ls -lthr

total 224K

drwxrwxr-x. 6 root root 84 Feb 24 17:53 wodles

-rwxrwxr-x. 1 root root 225 Feb 24 17:53 upgrade.sh

drwxrwxr-x. 4 root root 53 Feb 24 17:53 tools

drwxrwxr-x. 37 root root 4.0K Feb 24 17:53 src

drwxrwxr-x. 2 root root 54 Feb 24 17:53 integrations

-rwxrwxr-x. 1 root root 28K Feb 24 17:53 install.sh

[root@wazuh wazuh-3.11.4]#

To start the installation from source, run ./install.sh:

- Choose your preferred language from the list.

- Choose the installation type. We need a manager, so type “manager”.

- If you want email notifications, provide your email address and SMTP server/relay details.

- For the remaining prompts, answer “y” (the default for most of them).

The installation takes a few minutes to complete.

[root@wazuh wazuh-3.11.4]# ./install.sh

** Para instalação em português, escolha [br].

** 要使用中文进行安装, 请选择 [cn].

** Für eine deutsche Installation, wählen Sie [de].

** Για εγκατάσταση στα Ελληνικά, επιλέξτε [el].

** For installation in English, choose [en].

** Para instalar en español, elija [es].

** Pour une installation en français, choisissez [fr]

** A Magyar nyelvű telepítéshez válassza [hu].

** Per l'installazione in Italiano, scegli [it].

** 日本語でインストールします.選択して下さい.[jp].

** Voor installatie in het Nederlands, kies [nl].

** Aby instalować w języku Polskim, wybierz [pl].

** Для инструкций по установке на русском ,введите [ru].

** Za instalaciju na srpskom, izaberi [sr].

** Türkçe kurulum için seçin [tr].

(en/br/cn/de/el/es/fr/hu/it/jp/nl/pl/ru/sr/tr) [en]: en

Wazuh v3.11.4 (Rev. 31121) Installation Script - http://www.wazuh.com

You are about to start the installation process of Wazuh.

You must have a C compiler pre-installed in your system.

System: Linux wazuh.linuxsysadmins.local 3.10.0-1062.el7.x86_64 (centos 7.7)

User: root

Host: wazuh.linuxsysadmins.local

-- Press ENTER to continue or Ctrl-C to abort. --

1- What kind of installation do you want (manager, agent, local, hybrid or help)? manager

Manager (server) installation chosen.

2- Setting up the installation environment.

Choose where to install Wazuh [/var/ossec]:

Installation will be made at /var/ossec .

3- Configuring Wazuh.

3.1- Do you want e-mail notification? (y/n) [n]: y

What's your e-mail address? admin@linuxsysadmins.local

What's your SMTP server ip/host? 192.168.0.135

3.2- Do you want to run the integrity check daemon? (y/n) [y]: y

Running syscheck (integrity check daemon).

3.3- Do you want to run the rootkit detection engine? (y/n) [y]: y

Running rootcheck (rootkit detection).

3.4- Do you want to run policy monitoring checks? (OpenSCAP) (y/n) [y]: y

Running OpenSCAP (policy monitoring checks).

3.5- Active response allows you to execute a specific

command based on the events received.

By default, no active responses are defined.

Default white list for the active response:

192.168.0.21

192.168.0.1

Do you want to add more IPs to the white list? (y/n)? [n]: y

IPs (space separated): 192.168.0.22

3.6- Do you want to enable remote syslog (port 514 udp)? (y/n) [y]: y

Remote syslog enabled.

3.7 - Do you want to run the Auth daemon? (y/n) [y]: y

Running Auth daemon.

3.8- Do you want to start Wazuh after the installation? (y/n) [y]: y

Wazuh will start at the end of installation.

3.9- Setting the configuration to analyze the following logs:

-- /var/log/audit/audit.log

-- /var/ossec/logs/active-responses.log

-- /var/log/messages

-- /var/log/secure

-- /var/log/maillog

If you want to monitor any other file, just change

the ossec.conf and add a new localfile entry.

Any questions about the configuration can be answered

by visiting us online at https://documentation.wazuh.com/.

--- Press ENTER to continue ---

4- Installing the system

Running the Makefile

curl -so external/cJSON.tar.gz https://packages.wazuh.com/deps/3.11/cJSON.tar.gz

cd external && gunzip cJSON.tar.gz

cd external && tar -xf cJSON.tar

rm external/cJSON.tar

curl -so external/cpython_amd64.tar.gz https://packages.wazuh.com/deps/3.11/cpython_amd64.tar.gz

cd external && gunzip cpython_amd64.tar.gz

cd external && tar -xf cpython_amd64.tar

rm external/cpython_amd64.tar

curl -so external/curl.tar.gz https://packages.wazuh.com/deps/3.11/curl.tar.gz

cd external && gunzip curl.tar.gz

cd external && tar -xf curl.tar

rm external/curl.tar

curl -so external/libdb.tar.gz https://packages.wazuh.com/deps/3.11/libdb.tar.gz

cd external && gunzip libdb.tar.gz

cd external && tar -xf libdb.tar

rm external/libdb.tar

curl -so external/libffi.tar.gz https://packages.wazuh.com/deps/3.11/libffi.tar.gz

Installed /var/ossec/framework/python/lib/python3.7/site-packages/wazuh-3.11.4-py3.7.egg

Processing dependencies for wazuh==3.11.4

Finished processing dependencies for wazuh==3.11.4

Installing SCAP security policies…

Generating self-signed certificate for ossec-authd…

Building CDB lists…

Created symlink from /etc/systemd/system/multi-user.target.wants/wazuh-manager.service to /etc/systemd/system/wazuh-manager.service.

Starting Wazuh…

Configuration finished properly.

To start Wazuh:

/var/ossec/bin/ossec-control start

To stop Wazuh:

/var/ossec/bin/ossec-control stop

The configuration can be viewed or modified at /var/ossec/etc/ossec.conf

Thanks for using Wazuh.

Please don't hesitate to contact us if you need help or find

any bugs.

Use our public Mailing List at:

https://groups.google.com/forum/#!forum/wazuh

More information can be found at:

- http://www.wazuh.com

- http://www.ossec.net

--- Press ENTER to finish (maybe more information below). ---

In order to connect agent and server, you need to add each agent to the server.

Run the 'manage_agents' to add or remove them:

/var/ossec/bin/manage_agents

More information at:

https://documentation.wazuh.com/

[root@wazuh wazuh-3.11.4]#

We have truncated the long output.

Start and Enable service

The installer has already started Wazuh (step 3.8). To start it manually, run either of the commands below, and enable the service so that it starts at boot.

# /var/ossec/bin/ossec-control start

or

# systemctl start wazuh-manager

# systemctl enable wazuh-manager

Check the service status:

[root@wazuh ~]# systemctl status wazuh-manager

● wazuh-manager.service - Wazuh manager

Loaded: loaded (/etc/systemd/system/wazuh-manager.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-03-13 12:28:21 +04; 32min ago

Process: 20862 ExecStart=/usr/bin/env ${DIRECTORY}/bin/ossec-control start (code=exited, status=0/SUCCESS)

CGroup: /system.slice/wazuh-manager.service

├─20927 /var/ossec/bin/ossec-authd

├─20936 /var/ossec/bin/wazuh-db

├─20953 /var/ossec/bin/ossec-execd

├─20961 /var/ossec/bin/ossec-analysisd

├─20967 /var/ossec/bin/ossec-syscheckd

├─20976 /var/ossec/bin/ossec-remoted

├─20981 /var/ossec/bin/ossec-logcollector

├─20987 /var/ossec/bin/ossec-monitord

└─20999 /var/ossec/bin/wazuh-modulesd

Mar 13 12:28:19 wazuh.linuxsysadmins.local env[20862]: Started wazuh-db…

Mar 13 12:28:19 wazuh.linuxsysadmins.local env[20862]: Started ossec-execd…

Mar 13 12:28:19 wazuh.linuxsysadmins.local env[20862]: Started ossec-analysisd…

Mar 13 12:28:19 wazuh.linuxsysadmins.local env[20862]: Started ossec-syscheckd…

Mar 13 12:28:19 wazuh.linuxsysadmins.local env[20862]: Started ossec-remoted…

Mar 13 12:28:19 wazuh.linuxsysadmins.local env[20862]: Started ossec-logcollector…

Mar 13 12:28:19 wazuh.linuxsysadmins.local env[20862]: Started ossec-monitord…

Mar 13 12:28:19 wazuh.linuxsysadmins.local env[20862]: Started wazuh-modulesd…

Mar 13 12:28:21 wazuh.linuxsysadmins.local env[20862]: Completed.

Mar 13 12:28:21 wazuh.linuxsysadmins.local systemd[1]: Started Wazuh manager.

[root@wazuh ~]#

Next, let’s install the Wazuh API.

Installing Wazuh API

Before installing the API, we need to resolve its dependencies. The API runs on Node.js, so first add the NodeSource repository for Node.js 10.x:

# curl --silent --location https://rpm.nodesource.com/setup_10.x | bash -

Installing the NodeSource Node.js 10.x repo…

Inspecting system…

rpm -q --whatprovides redhat-release || rpm -q --whatprovides centos-release || rpm -q --whatprovides cloudlinux-release || rpm -q --whatprovides sl-release

uname -m

Confirming "el7-x86_64" is supported…

curl -sLf -o /dev/null 'https://rpm.nodesource.com/pub_10.x/el/7/x86_64/nodesource-release-el7-1.noarch.rpm'

Downloading release setup RPM…

mktemp

curl -sL -o '/tmp/tmp.UukGHctGkt' 'https://rpm.nodesource.com/pub_10.x/el/7/x86_64/nodesource-release-el7-1.noarch.rpm'

Installing release setup RPM…

rpm -i --nosignature --force '/tmp/tmp.UukGHctGkt'

Cleaning up…

rm -f '/tmp/tmp.UukGHctGkt'

Checking for existing installations…

rpm -qa 'node|npm' | grep -v nodesource

Run sudo yum install -y nodejs to install Node.js 10.x and npm.

You may also need development tools to build native addons:

sudo yum install gcc-c++ make

To install the Yarn package manager, run:

curl -sL https://dl.yarnpkg.com/rpm/yarn.repo | sudo tee /etc/yum.repos.d/yarn.repo

sudo yum install yarn

[root@wazuh ~]#

The Wazuh API requires Node.js 4.6.1 or later. Install Node.js, then set npm’s user option to 0 (root).
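To check whether a given Node.js version meets this minimum (node --version prints it with a leading v, for example v10.19.0 for the package installed below), a small comparison helper is enough. This is a sketch: version_ge is our own hypothetical name, and it relies on the -V (version sort) option of GNU sort, which CentOS 7 ships.

```shell
#!/usr/bin/env bash
# version_ge A B: hypothetical helper that succeeds (exit status 0) when
# version A is greater than or equal to version B. A leading "v", as printed
# by "node --version", is stripped first. Relies on GNU "sort -V".
version_ge() {
  local a=${1#v} b=${2#v}
  # sort -V orders versions naturally; if B sorts first (or A == B), A >= B.
  [ "$(printf '%s\n%s\n' "$a" "$b" | sort -V | head -n1)" = "$b" ]
}
```

Usage: version_ge "$(node --version)" 4.6.1 && echo "Node.js is recent enough"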

# sudo yum install -y nodejs

# npm config set user 0

Installing : 2:nodejs-10.19.0-1nodesource.x86_64 1/1

Verifying : 2:nodejs-10.19.0-1nodesource.x86_64 1/1

Installed:

nodejs.x86_64 2:10.19.0-1nodesource

Complete!

[root@wazuh ~]#

Next, install Yarn as suggested in the NodeSource output above. Yarn is a package manager for JavaScript code.

# curl -sL https://dl.yarnpkg.com/rpm/yarn.repo | sudo tee /etc/yum.repos.d/yarn.repo

# yum install yarn -y

Download the Wazuh API installer script and start the installation:

# curl -s -o install_api.sh https://raw.githubusercontent.com/wazuh/wazuh-api/v3.11.4/install_api.sh && bash ./install_api.sh download

Output for reference:

[root@wazuh ~]# curl -s -o install_api.sh https://raw.githubusercontent.com/wazuh/wazuh-api/v3.11.4/install_api.sh && bash ./install_api.sh download

Wazuh API

Downloading API from https://github.com/wazuh/wazuh-api/archive/v3.11.4.tar.gz

Installing Wazuh API 3.11.4

Installing API ['/var/ossec/api'].

Installing NodeJS modules.

Installing service.

Installing for systemd

Created symlink from /etc/systemd/system/multi-user.target.wants/wazuh-api.service to /etc/systemd/system/wazuh-api.service.

API URL: http://host_ip:55000/

user: 'foo'

password: 'bar'

Configuration: /var/ossec/api/configuration

Test: curl -u foo:bar -k http://127.0.0.1:55000?pretty

Note: You can configure the API executing /var/ossec/api/scripts/configure_api.sh

[API installed successfully]

[root@wazuh ~]#

Check the wazuh-api service with systemctl:

# systemctl status wazuh-api

[root@wazuh ~]# systemctl status wazuh-api

● wazuh-api.service - Wazuh API daemon

Loaded: loaded (/etc/systemd/system/wazuh-api.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-03-13 13:57:00 +04; 1min 20s ago

Docs: https://documentation.wazuh.com/current/user-manual/api/index.html

Main PID: 22619 (node)

CGroup: /system.slice/wazuh-api.service

└─22619 /usr/bin/node /var/ossec/api/app.js

Mar 13 13:57:00 wazuh.linuxsysadmins.local systemd[1]: Started Wazuh API daemon.

[root@wazuh ~]#

Securing Wazuh API

Out of the box, the API listens on plain HTTP with the default user “foo” and password “bar”. Let’s secure it by enabling HTTPS and setting our own credentials with the configure_api.sh script.
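Why HTTPS matters here: with HTTP Basic authentication, which the API uses (curl -u), the username and password are only base64-encoded in the Authorization header, so anyone who can see the traffic can decode them. The sketch below, our own hypothetical helper rather than anything shipped with Wazuh, reproduces the header for the default foo/bar credentials.

```shell
#!/usr/bin/env bash
# basic_auth_header USER PASSWORD: hypothetical helper that prints the HTTP
# Basic Authorization header curl sends for "curl -u USER:PASSWORD".
# The credentials are only base64-encoded, not encrypted.
basic_auth_header() {
  # printf avoids a trailing newline; tr removes base64's line wrapping
  printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}
```

basic_auth_header foo bar prints Authorization: Basic Zm9vOmJhcg==, and echo Zm9vOmJhcg== | base64 -d turns it straight back into foo:bar. For the same reason, avoid sharing curl -v output, which prints this header.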

# /var/ossec/api/scripts/configure_api.sh

[root@wazuh ~]# /var/ossec/api/scripts/configure_api.sh

Wazuh API Configuration

TCP port [55000]:

Using TCP port 55000.

Enable HTTPS and generate SSL certificate? [Y/n/s]: Y

Step 1: Create key [Press Enter]

Generating RSA private key, 2048 bit long modulus

……………………………………………+++

…………………………………………………………………………………..+++

e is 65537 (0x10001)

Enter pass phrase for server.key:

Verifying - Enter pass phrase for server.key:

Enter pass phrase for server.key.org:

writing RSA key

Step 2: Create self-signed certificate [Press Enter]

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

Country Name (2 letter code) [XX]:IN

State or Province Name (full name) []:TN

Locality Name (eg, city) [Default City]:Chennai

Organization Name (eg, company) [Default Company Ltd]:Self

Organizational Unit Name (eg, section) []:Nix

Common Name (eg, your name or your server's hostname) []:linuxsysadmins.local

Email Address []:admin@linuxsysadmins.local

Please enter the following 'extra' attributes

to be sent with your certificate request

A challenge password []:

An optional company name []:

Key: /var/ossec/api/configuration/ssl/server.key.

Certificate: /var/ossec/api/configuration/ssl/server.crt

Continue with next section [Press Enter]

Enable user authentication? [Y/n/s]: Y

API user: babinlonston

New password:

Re-type new password:

Adding password for user babinlonston.

is the API running behind a proxy server? [y/N/s]: N

API not running behind proxy server.

Configuration changed.

Restarting API.

[Configuration changed]

[root@wazuh ~]#

When the script completes, it restarts the API. If you need to restart the service manually, use:

# systemctl restart wazuh-api

The updated account information is stored in the following file, with the password saved as a bcrypt hash:

[root@wazuh ~]# cat /var/ossec/api/configuration/auth/user

babinlonston:$2a$10$3Ov.Glfvr2p81u3j3anmu.fw6DXZJDq0ZEURCCr1WXxt3EEBdtHs.

[root@wazuh ~]#

Testing secure API communication

With HTTPS and the new user account configured, test the API. The -k option tells curl to skip certificate verification, which is needed here because the certificate is self-signed.
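The API answers with a JSON object whose top-level "error" field is 0 on success, as in the response shown below. For scripted health checks, a rough sketch is to test for that field; api_ok is our own hypothetical helper, and it is a plain text match rather than a JSON parser, so it assumes the usual response layout.

```shell
#!/usr/bin/env bash
# api_ok: hypothetical helper that reads a Wazuh API JSON response on stdin
# and succeeds when its "error" field is 0. This is a text match, not a full
# JSON parser, so it assumes the usual flat response layout.
api_ok() {
  grep -Eq '"error"[[:space:]]*:[[:space:]]*0([^0-9]|$)'
}
```

Usage: curl -s -u babinlonston:xxxxxxxx -k "https://127.0.0.1:55000/?pretty" | api_ok && echo "API OK"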

[root@wazuh ~]# curl -u babinlonston:xxxxxxxx -k https://127.0.0.1:55000?pretty -v

* About to connect() to 127.0.0.1 port 55000 (#0)

* Trying 127.0.0.1…

* Connected to 127.0.0.1 (127.0.0.1) port 55000 (#0)

* Initializing NSS with certpath: sql:/etc/pki/nssdb

* skipping SSL peer certificate verification

* SSL connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

* Server certificate:

* subject: E=admin@linuxsysadmins.local,CN=linuxsysadmins.local,OU=Nix,O=Self,L=Chennai,ST=TN,C=IN

* start date: Mar 13 10:18:08 2020 GMT

* expire date: Oct 21 10:18:08 2025 GMT

* common name: linuxsysadmins.local

* issuer: E=admin@linuxsysadmins.local,CN=linuxsysadmins.local,OU=Nix,O=Self,L=Chennai,ST=TN,C=IN

* Server auth using Basic with user 'babinlonston'

> GET /?pretty HTTP/1.1

> Authorization: Basic YmFiaW5sb25zdG9uOlJlZGhhdEAxMjM=

> User-Agent: curl/7.29.0

> Host: 127.0.0.1:55000

> Accept: */*

< HTTP/1.1 200 OK

< X-Powered-By: Express

< Access-Control-Allow-Origin: *

< Content-Type: text/html; charset=utf-8

< Content-Length: 235

< ETag: W/"eb-TPfP9bhXx8EStmyRVAbjKXdy40c"

< Date: Fri, 13 Mar 2020 10:23:04 GMT

< Connection: keep-alive

<

{

"error": 0,

"data": {

"msg": "Welcome to Wazuh HIDS API",

"api_version": "v3.11.4",

"hostname": "wazuh.linuxsysadmins.local",

"timestamp": "Fri Mar 13 2020 14:23:04 GMT+0400 (Gulf Standard Time)"

}

}

* Connection #0 to host 127.0.0.1 left intact

[root@wazuh ~]#

Installing Filebeat

We use Filebeat to forward the Wazuh alerts from the manager to the Elasticsearch server.

Let’s import the GPG key and add the repository.

# rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch

# cat > /etc/yum.repos.d/elastic.repo << EOF

[elasticsearch-7.x]

name=Elasticsearch repository for 7.x packages

baseurl=https://artifacts.elastic.co/packages/7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

Install Filebeat, pinning the required version:

# yum install filebeat-7.3.0 -y

Download the Filebeat configuration file from the Wazuh repository, save it under /etc/filebeat/, and make it readable:

# curl -so /etc/filebeat/filebeat.yml https://raw.githubusercontent.com/wazuh/wazuh/v3.11.4/extensions/filebeat/7.x/filebeat.yml

# chmod go+r /etc/filebeat/filebeat.yml

Download the alerts template for Elasticsearch:

# curl -so /etc/filebeat/wazuh-template.json https://raw.githubusercontent.com/wazuh/wazuh/v3.11.4/extensions/elasticsearch/7.x/wazuh-template.json

# chmod go+r /etc/filebeat/wazuh-template.json

Download the Wazuh module for Filebeat and extract it under /usr/share/filebeat/module:

# curl -s https://packages.wazuh.com/3.x/filebeat/wazuh-filebeat-0.1.tar.gz | sudo tar -xvz -C /usr/share/filebeat/module

Configure filebeat

Once the above steps are done, let’s configure Filebeat. As mentioned earlier, the Elastic server is 192.168.0.131 (elastic.linuxsysadmins.local).

# vim /etc/filebeat/filebeat.yml

The only change needed in this file is to replace http://ELASTIC_SERVER_IP:9200 with http://192.168.0.131:9200.
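Instead of editing the file by hand, the same one-line replacement can be made non-interactively with sed. set_es_output is a hypothetical helper name of our own; it assumes GNU sed (for -i), as shipped with CentOS 7, and the exact placeholder text from the Wazuh filebeat.yml.

```shell
#!/usr/bin/env bash
# set_es_output FILE IP: hypothetical helper that replaces the
# ELASTIC_SERVER_IP placeholder in a Filebeat config with the given IP.
# Uses GNU sed -i (in-place edit).
set_es_output() {
  local file=$1 ip=$2
  sed -i "s|http://ELASTIC_SERVER_IP:9200|http://${ip}:9200|" "$file"
}
```

Usage: set_es_output /etc/filebeat/filebeat.yml 192.168.0.131, then grep output.elasticsearch.hosts /etc/filebeat/filebeat.yml to confirm the change.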

output.elasticsearch.hosts: ['http://ELASTIC_SERVER_IP:9200']

to

output.elasticsearch.hosts: ['http://192.168.0.131:9200']

Enable and start the Filebeat service:

# systemctl daemon-reload

# systemctl enable filebeat.service

# systemctl start filebeat.service

Next, we need to set up the Elasticsearch server. Before that, install Java; in this setup, we are using Java 13.0.2.

To set up Java, follow this guide. Note that Elasticsearch 7.x also ships with a bundled JDK, which it uses when JAVA_HOME is not set.

[root@elastic ~]# java -version

java version "13.0.2" 2020-01-14

Java(TM) SE Runtime Environment (build 13.0.2+8)

Java HotSpot(TM) 64-Bit Server VM (build 13.0.2+8, mixed mode, sharing)

[root@elastic ~]#

Setting up Elastic Server

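Before starting any configuration, make sure the hostname is set. In the output below, only the transient hostname is set; running hostnamectl set-hostname elastic.linuxsysadmins.local also sets the static hostname, so that it survives a reboot.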

[root@elastic ~]# hostnamectl

Static hostname: localhost.localdomain

Transient hostname: elastic.linuxsysadmins.local

Icon name: computer-vm

Chassis: vm

Machine ID: 36618758588646fb9bd7e5ceb0e73a70

Boot ID: e737fa66fb1148bcaadb142ca2dc39d3

Virtualization: kvm

Operating System: CentOS Linux 7 (Core)

CPE OS Name: cpe:/o:centos:centos:7

Kernel: Linux 3.10.0-1062.el7.x86_64

Architecture: x86-64

[root@elastic ~]#

Firewalls for Elastic Server

Open the required ports on the Elastic server: 9200/tcp for Elasticsearch and 5601/tcp for Kibana.

# firewall-cmd --add-port=9200/tcp --permanent

# firewall-cmd --add-port=5601/tcp --permanent

# firewall-cmd --reload

# firewall-cmd --list-all

Next, import the GPG key for the Elastic repository and create the repo file.

# rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

# cat > /etc/yum.repos.d/elastic.repo << EOF

[elasticsearch-7.x]

name=Elasticsearch repository for 7.x packages

baseurl=https://artifacts.elastic.co/packages/7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

Installing Elastic

After adding the repository, install Elasticsearch. The package is around 475 MB.

# yum install elasticsearch-7.6.1 -y

Output for reference:

Installing : elasticsearch-7.6.1-1.x86_64 1/1

NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch.service

You can start elasticsearch service by executing

sudo systemctl start elasticsearch.service

Created elasticsearch keystore in /etc/elasticsearch

Verifying : elasticsearch-7.6.1-1.x86_64 1/1

Installed:

elasticsearch.x86_64 0:7.6.1-1

Complete!

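[root@elastic ~]#

By default, Elasticsearch listens only on the loopback interface (127.0.0.1). To make it reachable from the Wazuh server, set network.host to the server’s IP address and give the node a name. Binding to a non-loopback address makes Elasticsearch enforce its production bootstrap checks, which require a discovery setting, so also list this node in cluster.initial_master_nodes.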
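If you prefer to make these elasticsearch.yml edits non-interactively (for example from a provisioning script), the sketch below sets a flat key: value line, uncommenting it when the stock file has it commented out. set_yaml_key is a hypothetical helper of our own; it is not a YAML parser and only suits top-level settings like the three shown here. It assumes GNU grep and sed.

```shell
#!/usr/bin/env bash
# set_yaml_key FILE KEY VALUE: hypothetical helper for flat "key: value"
# settings such as those in elasticsearch.yml. If the key is present,
# commented out or not, the line is replaced; otherwise it is appended.
# Assumes GNU grep/sed and a VALUE without "|" or "&" characters.
set_yaml_key() {
  local file=$1 key=$2 value=$3 esc
  # Escape dots so "network.host" is matched literally in the regex.
  esc=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -q "^#\?[[:space:]]*${esc}:" "$file"; then
    sed -i "s|^#\?[[:space:]]*${esc}:.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}
```

Usage, for the settings in this guide:

set_yaml_key /etc/elasticsearch/elasticsearch.yml network.host 192.168.0.131
set_yaml_key /etc/elasticsearch/elasticsearch.yml node.name elastic
set_yaml_key /etc/elasticsearch/elasticsearch.yml cluster.initial_master_nodes '["elastic"]'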

# vim /etc/elasticsearch/elasticsearch.yml

network.host: 192.168.0.131

node.name: elastic

cluster.initial_master_nodes: ["elastic"]

Start and enable the Elasticsearch service:

# systemctl daemon-reload

# systemctl enable elasticsearch.service

# systemctl start elasticsearch.service

[root@elastic ~]# systemctl status elasticsearch.service

● elasticsearch.service - Elasticsearch

Loaded: loaded (/usr/lib/systemd/system/elasticsearch.service; enabled; vendor preset: disabled)

Active: active (running) since Sat 2020-03-14 12:13:23 +04; 2min 13s ago

Docs: http://www.elastic.co

Main PID: 1342 (java)

CGroup: /system.slice/elasticsearch.service

├─1342 /usr/java/jdk-13.0.2/bin/java -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Df…

└─1934 /usr/share/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

Mar 14 12:13:12 elastic.linuxsysadmins.local systemd[1]: Starting Elasticsearch…

Mar 14 12:13:12 elastic.linuxsysadmins.local elasticsearch[1342]: Java HotSpot(TM) 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and … release.

Mar 14 12:13:23 elastic.linuxsysadmins.local systemd[1]: Started Elasticsearch.

Hint: Some lines were ellipsized, use -l to show in full.

[root@elastic ~]#

Let’s verify the cluster health. The status should be “green”.
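For scripted checks, the status can be pulled out of the _cat/health?v output by column name rather than by position. health_status is our own hypothetical helper; Elasticsearch reports green, yellow or red in this column.

```shell
#!/usr/bin/env bash
# health_status: hypothetical helper that reads "_cat/health?v" output on
# stdin and prints the value of the "status" column, located by header name.
health_status() {
  awk 'NR == 1 { for (i = 1; i <= NF; i++) if ($i == "status") col = i; next }
       col { print $col }'
}
```

Usage: curl -s "192.168.0.131:9200/_cat/health?v" | health_status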

[root@elastic ~]# curl 192.168.0.131:9200/_cat/health?v

epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent

1584126047 19:00:47 elasticsearch green 1 1 6 6 0 0 0 0 - 100.0%

[root@elastic ~]#

A Few Tweaks for Elasticsearch

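Configure the Java path and heap memory for Elasticsearch in /etc/sysconfig/elasticsearch. Note that Elasticsearch 5.0 and later no longer use ES_HEAP_SIZE: on 7.x, the heap is set with the -Xms and -Xmx options in /etc/elasticsearch/jvm.options (or through ES_JAVA_OPTS). Restart Elasticsearch after changing these settings.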
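Because Elasticsearch 7.x reads its heap size from the -Xms and -Xmx lines in /etc/elasticsearch/jvm.options, a sketch for setting both to 4g from a script could look like this. set_es_heap is a hypothetical helper of our own and assumes GNU sed; Elastic’s guidance is to keep the two values equal and to give the heap no more than about half of the available RAM.

```shell
#!/usr/bin/env bash
# set_es_heap FILE SIZE: hypothetical helper that sets both the initial (-Xms)
# and maximum (-Xmx) JVM heap in an Elasticsearch jvm.options file, keeping
# them equal. Uses GNU sed -i.
set_es_heap() {
  local file=$1 size=$2
  sed -i -e "s/^-Xms.*/-Xms${size}/" -e "s/^-Xmx.*/-Xmx${size}/" "$file"
}
```

Usage: set_es_heap /etc/elasticsearch/jvm.options 4g && systemctl restart elasticsearch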

# vim /etc/sysconfig/elasticsearch

JAVA_HOME=/usr/java/jdk-13.0.2

ES_HEAP_SIZE=4g

MAX_OPEN_FILES=65535

Adding Filebeat Template

Once the Elasticsearch server is up and running, switch back to the Wazuh server, where Filebeat is installed, and run the command below to load the Filebeat template.

# filebeat setup --index-management -E setup.template.json.enabled=false

[root@wazuh ~]# filebeat setup --index-management -E setup.template.json.enabled=false

ILM policy and write alias loading not enabled.

Index setup finished.

[root@wazuh ~]#

After running the command, the Elasticsearch log (/var/log/elasticsearch/elasticsearch.log) on the Elastic server should show an entry like this:

[2020-03-13T16:41:19,832][INFO ][o.e.c.m.MetaDataIndexTemplateService] [elastic] adding template [filebeat-7.6.1] for index patterns [filebeat-7.6.1-*]

Let’s also confirm that Elasticsearch is reachable by running curl from the Filebeat (Wazuh) server:

[root@wazuh ~]# curl http://192.168.0.131:9200

{

"name" : "elastic",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "hivhkmBPRXq5ihLx0KLRgg",

"version" : {

"number" : "7.6.1",

"build_flavor" : "default",

"build_type" : "rpm",

"build_hash" : "aa751e09be0a5072e8570670309b1f12348f023b",

"build_date" : "2020-02-29T00:15:25.529771Z",

"build_snapshot" : false,

"lucene_version" : "8.4.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

[root@wazuh ~]#

Looks good so far. Let’s move on.
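Elastic generally recommends running the same version across the stack, and the Wazuh Kibana app is built for one exact Kibana release (the 7.6.1 in wazuhapp-3.11.4_7.6.1.zip). Note that this guide pins filebeat-7.3.0 while Elasticsearch is 7.6.1, even though the template loaded above is named filebeat-7.6.1. A quick sketch for comparing at least the major.minor part of two versions; same_minor is a hypothetical helper of our own and assumes three-part versions such as 7.6.1.

```shell
#!/usr/bin/env bash
# same_minor A B: hypothetical helper that succeeds when two three-part
# versions share the same major.minor release, e.g. 7.6.1 and 7.6.0.
same_minor() {
  local a=${1#v} b=${2#v}
  # Drop the last dot-separated component: 7.6.1 -> 7.6
  [ "${a%.*}" = "${b%.*}" ]
}
```

Usage: same_minor "$(rpm -q --qf '%{VERSION}' filebeat)" 7.6.1 || echo "Filebeat and Elasticsearch versions differ"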

Setting up Kibana

To visualize the events stored in Elasticsearch, we use Kibana. Install Kibana 7.6.1 so that it matches the Elasticsearch version and the Wazuh app build used below; Kibana refuses to install a plugin built for a different Kibana version.

# yum install kibana-7.6.1 -y

Next, download the Wazuh app (the Wazuh plugin for Kibana) and install it:

# cd /tmp/

# wget https://packages.wazuh.com/wazuhapp/wazuhapp-3.11.4_7.6.1.zip

# cd /usr/share/kibana/

# sudo -u kibana bin/kibana-plugin install file:///tmp/wazuhapp-3.11.4_7.6.1.zip

[root@elastic kibana]# sudo -u kibana bin/kibana-plugin install file:///tmp/wazuhapp-3.11.4_7.6.1.zip

Attempting to transfer from file:///tmp/wazuhapp-3.11.4_7.6.1.zip

Transferring 25031565 bytes………………..

Transfer complete

Retrieving metadata from plugin archive

Extracting plugin archive

Extraction complete

Plugin installation complete

[root@elastic kibana]#

Next, edit the Kibana configuration so that Kibana listens on the server’s IP address and can be reached from other machines.

# vim /etc/kibana/kibana.yml

In the same file, set the address of the Elasticsearch server as well:

server.port: 5601

server.host: "192.168.0.131"

server.name: "elastic"

elasticsearch.hosts: ["http://192.168.0.131:9200"]

pid.file: /var/run/kibana.pid

logging.dest: /var/log/kibana/kibana.log

logging.verbose: false

Create the PID file and log file with the appropriate ownership.
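One caveat: on CentOS 7, /var/run is a symlink to /run, which is a tmpfs, so a PID file created with touch does not survive a reboot, and Kibana, running as the kibana user, may then be unable to create it and fail to start. A sketch of one way to have systemd recreate it at boot with systemd-tmpfiles, assuming the pid.file path above; write_kibana_tmpfiles is a hypothetical helper of our own.

```shell
#!/usr/bin/env bash
# write_kibana_tmpfiles [FILE]: hypothetical helper that writes a
# systemd-tmpfiles rule recreating /run/kibana.pid, owned by kibana, at boot.
# Fields: type path mode user group age ("f" creates the file if missing).
write_kibana_tmpfiles() {
  local dest=${1:-/etc/tmpfiles.d/kibana.conf}
  printf 'f /run/kibana.pid 0644 kibana kibana -\n' > "$dest"
}
```

Usage: write_kibana_tmpfiles && systemd-tmpfiles --create /etc/tmpfiles.d/kibana.conf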

# touch /var/run/kibana.pid

# chown kibana:kibana /var/run/kibana.pid

# mkdir /var/log/kibana/

# touch /var/log/kibana/kibana.log

# chown -R kibana:kibana /var/log/kibana/*

Increase the Kibana heap memory:

# vim /etc/default/kibana

NODE_OPTIONS="--max-old-space-size=4096"

Add port 5601 to the http_port_t type in SELinux.
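To confirm the label after running the command below, semanage port -l lists each port type with its protocol and a comma-separated port list. A hypothetical helper of our own for checking it from a script; it only matches single ports, not ranges.

```shell
#!/usr/bin/env bash
# port_has_type TYPE PROTO PORT: hypothetical helper that reads
# "semanage port -l" output on stdin and succeeds if PORT is listed for
# TYPE/PROTO. Only single ports are matched, not ranges such as 8080-8090.
port_has_type() {
  awk -v t="$1" -v p="$2" -v port="$3" '
    $1 == t && $2 == p {
      for (i = 3; i <= NF; i++) {
        v = $i; sub(/,$/, "", v)     # strip the comma separating ports
        if (v == port) found = 1
      }
    }
    END { exit !found }'
}
```

Usage: semanage port -l | port_has_type http_port_t tcp 5601 && echo "5601 is labelled http_port_t"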

[root@elastic ~]# semanage port -a -t http_port_t -p tcp 5601

Finally, enable and start the Kibana service:

# systemctl daemon-reload

# systemctl enable kibana.service

# systemctl start kibana.service

[root@elastic ~]# systemctl status kibana.service

● kibana.service - Kibana

Loaded: loaded (/etc/systemd/system/kibana.service; enabled; vendor preset: disabled)

Active: active (running) since Sat 2020-03-14 12:13:10 +04; 2min 24s ago

Main PID: 970 (node)

CGroup: /system.slice/kibana.service

└─970 /usr/share/kibana/bin/../node/bin/node /usr/share/kibana/bin/../src/cli -c /etc/kibana/kibana.yml

Mar 14 12:13:10 localhost.localdomain systemd[1]: Started Kibana.

Configure the Wazuh app with the API credentials, and make sure to use HTTPS in the URL:

# vim /usr/share/kibana/plugins/wazuh/wazuh.yml

hosts:

default:

url: https://192.168.0.130

port: 55000

user: babinlonston

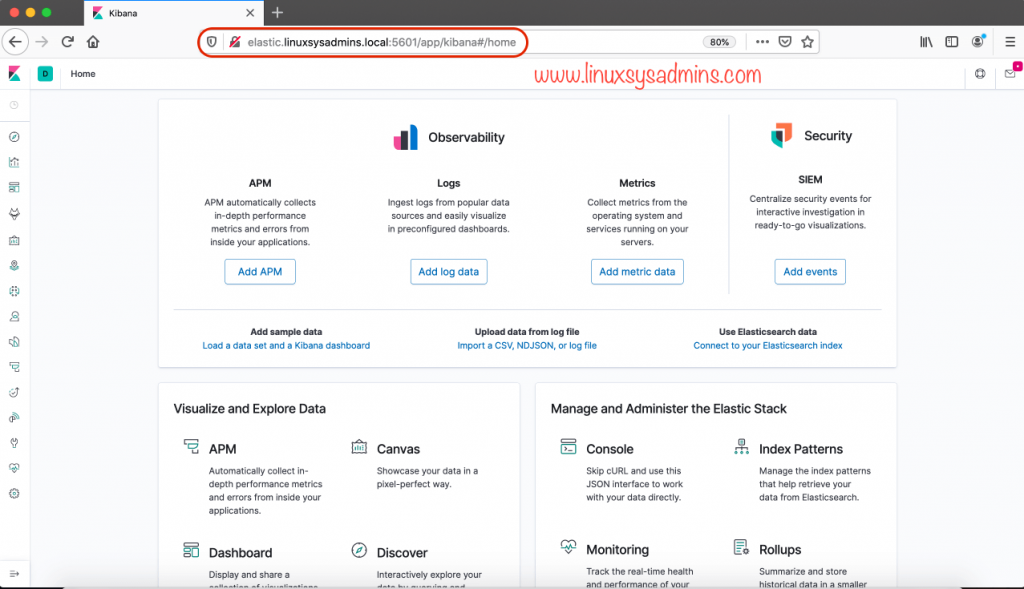

password: xxxxxxxxx Access Kibana Graphical Dashboard

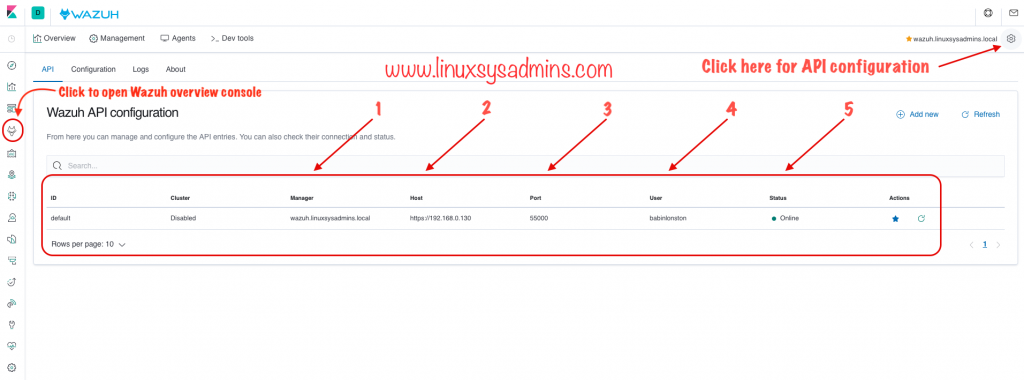

Navigate to http://elastic.linuxsysadmins.local:5601 to access the Kibana dashboard.

The status of the API can be checked from the Kibana graphical interface.

Click the Wazuh icon on the left, then click the gear icon on the right-hand side to open the API configuration window. It shows:

- Wazuh Manager server.

- The IP address of the Wazuh server.

- Port of the API.

- User account used for API communication.

- Status of the API communication.

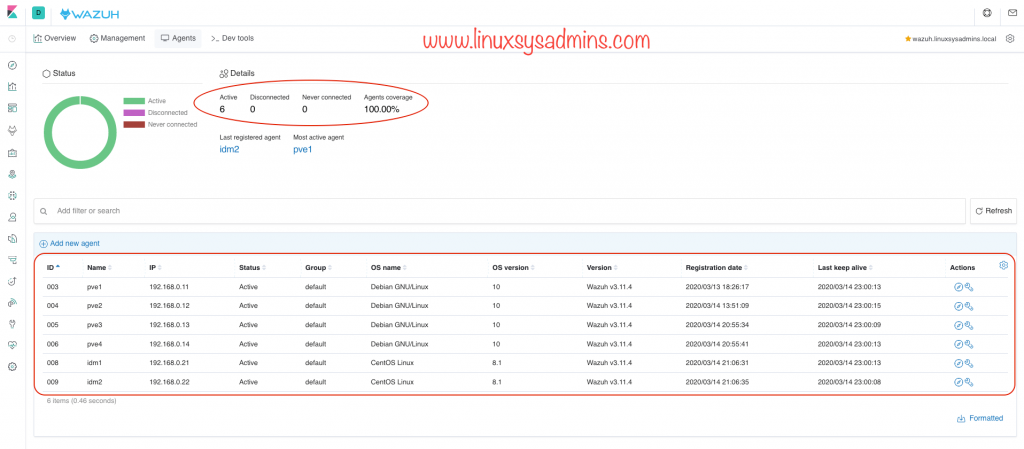

Now, let’s add all of my physical servers as agents.

Agent Installation for Debian-Based Servers

Install the Agent

A few of my physical servers need to be monitored, so let’s add these Debian-based servers to the Wazuh Manager. The agent setup starts with installing the required dependencies:

# apt-get install curl apt-transport-https lsb-release gnupg2

root@pve1:~# apt-get install curl apt-transport-https lsb-release gnupg2

Reading package lists… Done

Building dependency tree

Reading state information… Done

apt-transport-https is already the newest version (1.8.2).

gnupg2 is already the newest version (2.2.12-1+deb10u1).

lsb-release is already the newest version (10.2019051400).

curl is already the newest version (7.64.0-4+deb10u1).

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

root@pve1:~#

Add the Wazuh GPG key, then add the repository:

# curl -s https://packages.wazuh.com/key/GPG-KEY-WAZUH | apt-key add -

# echo "deb https://packages.wazuh.com/3.x/apt/ stable main" | tee /etc/apt/sources.list.d/wazuh.list

# apt-get update

Start the agent installation:

# apt-get install wazuh-agent

root@pve1:~# apt-get install wazuh-agent

Reading package lists… Done

Building dependency tree

Reading state information… Done

wazuh-agent is already the newest version (3.11.4-1).

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

root@pve1:~#

Adding client

To add the client, we need to work on the Wazuh Manager. When running the manage_agents command, use the -a option with the client’s IP address or hostname, and -n with the name to be listed in the Wazuh Manager.

# /var/ossec/bin/manage_agents -a 192.168.0.11 -n pve1

[root@wazuh ~]# /var/ossec/bin/manage_agents -a 192.168.0.11 -n pve1

Wazuh v3.11.4 Agent manager. *

The following options are available: *

(A)dd an agent (A).

(E)xtract key for an agent (E).

(L)ist already added agents (L).

(R)emove an agent (R).

(Q)uit.

Choose your action: A,E,L,R or Q:

Adding a new agent (use '\q' to return to the main menu).

Please provide the following:

A name for the new agent: * The IP Address of the new agent: Confirm adding it?(y/n):

Agent added with ID 003.

manage_agents: Exiting.

[root@wazuh ~]#

List and verify all the added agents:

[root@wazuh ~]# /var/ossec/bin/manage_agents -l

Available agents:

ID: 003, Name: pve1, IP: 192.168.0.11

[root@wazuh ~]#

Now, we need to extract the key for an agent using its ID number.
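With many agents registered, the ID can be looked up by name from the `manage_agents -l` output instead of reading it manually. A small sketch, assuming the `ID: 003, Name: pve1, IP: 192.168.0.11` line format shown above; `agent_id_by_name` is a hypothetical helper, not a Wazuh command.

```shell
# Hypothetical helper: print the agent ID for a given agent name.
# Expects "manage_agents -l" output on stdin, with lines like:
#   ID: 003, Name: pve1, IP: 192.168.0.11
agent_id_by_name() {
    awk -v want="$1" -F', ' '
        /ID: / {
            id = $1;   sub(/.*ID: /, "", id)      # "   ID: 003" -> "003"
            name = $2; sub(/^Name: /, "", name)   # "Name: pve1" -> "pve1"
            if (name == want) print id
        }'
}

# Example:
#   /var/ossec/bin/manage_agents -e "$(/var/ossec/bin/manage_agents -l | agent_id_by_name pve1)"
```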

[root@wazuh ~]# /var/ossec/bin/manage_agents -e 003

Agent key information for '003' is:

MDAzIHB2ZTEgMTkyLjE2OC4wLjExIGFhYjBhMGRhYmY4OWFhZjBhMzI5MTk4ZTFkMzg4ODUxODRjOGM0YjQ0NDQ5YTU5N2EyYWYyYTAzNTNhMWExZTY=

[root@wazuh ~]#

Once the key is ready, switch to the client side and run the same command with the -i option to import the authentication key.
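Before importing, the key can be sanity-checked. Decoding the key shown above with base64 gives the plain string `003 pve1 192.168.0.11` followed by a hex secret, i.e. the agent ID, name, IP and shared secret. The sketch below relies on that observation; `decode_agent_key` and `check_agent_key` are hypothetical helper names, and `base64 -d` assumes GNU coreutils or a compatible implementation.

```shell
# Hypothetical helpers: inspect a key produced by "manage_agents -e".
# Decoded, the key looks like "<ID> <name> <IP> <secret>".
decode_agent_key() {
    printf '%s' "$1" | base64 -d
}

# Succeeds only if the key's ID and name match the expected values.
# Usage: check_agent_key KEY EXPECTED_ID EXPECTED_NAME
check_agent_key() {
    local decoded id name
    decoded=$(decode_agent_key "$1") || return 1
    id=$(printf '%s\n' "$decoded" | cut -d' ' -f1)
    name=$(printf '%s\n' "$decoded" | cut -d' ' -f2)
    [ "$id" = "$2" ] && [ "$name" = "$3" ]
}

# Example (on the client, before importing):
#   check_agent_key "$KEY" 003 pve1 && /var/ossec/bin/manage_agents -i "$KEY"
```

This catches a key that was copied for the wrong agent before it is written on the client.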

root@pve1:~# /var/ossec/bin/manage_agents -i MDAzIHB2ZTEgMTkyLjE2OC4wLjExIGFhYjBhMGRhYmY4OWFhZjBhMzI5MTk4ZTFkMzg4ODUxODRjOGM0YjQ0NDQ5YTU5N2EyYWYyYTAzNTNhMWExZTY=

Agent information:

ID:003

Name:pve1

IP Address:192.168.0.11

Confirm adding it?(y/n): y

Added.

root@pve1:~#

That’s it, let’s restart the agent.

# systemctl restart wazuh-agent

We have added a few agents, and they are active.
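To confirm from the manager that every agent is actually connected, the output of `/var/ossec/bin/agent_control -l` can be filtered. This sketch assumes each agent line ends with its status, e.g. `ID: 003, Name: pve1, IP: 192.168.0.11, Active`; that format is an assumption about Wazuh 3.x, so compare it with your own output first. `inactive_agents` is a hypothetical helper.

```shell
# Hypothetical helper: print the names of agents whose status is not "Active".
# Expects "agent_control -l" output on stdin; assumed line format:
#   ID: 003, Name: pve1, IP: 192.168.0.11, Active
inactive_agents() {
    awk -F', ' '
        /ID: / {
            name = $2; sub(/^Name: /, "", name)
            status = $NF                         # last field is the status
            if (status !~ /^Active/) print name  # "Active/Local" also counts as up
        }'
}

# Example (on the Wazuh Manager):
#   /var/ossec/bin/agent_control -l | inactive_agents
```

An empty result means every listed agent reported an Active status.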

That’s it, we have completed the Wazuh client-side setup.
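Agents newer than the manager may fail to connect; Wazuh's compatibility guidance is that the manager should run the same or a newer version than its agents (worth confirming for your release). In this setup both are 3.11.4. A minimal sketch of that comparison, using `sort -V` (GNU coreutils) for version ordering; `agent_version_ok` is a hypothetical helper.

```shell
# Hypothetical helper: succeed if the agent version is <= the manager version.
# A Debian revision suffix such as "-1" is stripped before comparing.
agent_version_ok() {
    local agent="${1%%-*}" manager="${2%%-*}"
    # sort -V puts the lower version first; the agent must be the lower (or equal) one
    [ "$(printf '%s\n%s\n' "$agent" "$manager" | sort -V | head -n1)" = "$agent" ]
}

# Example, comparing the versions seen in this guide:
#   agent_version_ok 3.11.4-1 3.11.4 && echo "agent version is compatible"
# On the Debian client, the installed version can be read with:
#   dpkg-query -W -f='${Version}' wazuh-agent
```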

Troubleshooting and fixes

If Elasticsearch or Kibana fails to start, troubleshoot with the commands below.

# journalctl _SYSTEMD_UNIT=kibana.service

# journalctl -f _SYSTEMD_UNIT=kibana.service

Solution for JavaScript heap out of memory in Kibana

If we skip increasing the heap memory for Kibana's Node.js process, we may encounter the errors below.

FATAL ERROR: Ineffective mark-compacts near heap limit Allocation failed - JavaScript heap out of memory

FATAL ERROR: CALL_AND_RETRY_LAST Allocation failed - JavaScript heap out of memory

Reference URL: https://groups.google.com/forum/#!topic/wazuh/Ji7-l3Y8JK8
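To avoid these errors, the Node.js heap limit for Kibana can be raised with `NODE_OPTIONS="--max-old-space-size=<MB>"`, which Node.js reads from the environment. The sketch below writes that setting into Kibana's environment file. Assumptions: the file is `/etc/default/kibana` (the packaged systemd unit may read `/etc/sysconfig/kibana` instead; `systemctl cat kibana` shows which), 4096 MB is only an example value to size against the host's RAM, and `set_kibana_heap` is a hypothetical helper.

```shell
# Hypothetical helper: set NODE_OPTIONS="--max-old-space-size=<MB>" for Kibana.
# Any existing NODE_OPTIONS line is replaced as a whole; all other lines are kept.
# Usage: set_kibana_heap SIZE_MB [ENV_FILE]
set_kibana_heap() {
    local size_mb="$1" env_file="${2:-/etc/default/kibana}" tmp
    tmp=$(mktemp) || return 1
    # Copy everything except a previous NODE_OPTIONS line
    if [ -f "$env_file" ]; then
        grep -v '^NODE_OPTIONS=' "$env_file" > "$tmp"
    fi
    echo "NODE_OPTIONS=\"--max-old-space-size=${size_mb}\"" >> "$tmp"
    # Write back with cat so an existing file keeps its owner and mode
    cat "$tmp" > "$env_file"
    rm -f "$tmp"
}

# Example:
#   set_kibana_heap 4096 && systemctl restart kibana
```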

The corresponding journal entries look like this:

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: <--- JS stacktrace --->

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: ==== JS stack trace =========================================

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: 0: ExitFrame [pc: 0x1b22d6a5be1d]

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: Security context: 0x20f68519e6e9

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: 1: add [0x20f685191831](this=0x22d475c05fc9 ,0x11a62cea3299 )

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: 2: prepend_comments [0xd64b2eba221] [/usr/share/kibana/node_modules/terser/dist/bundle.min.js:1] [bytecode=0x142c02cb7e9

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: 3: /* anonym…

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: FATAL ERROR: CALL_AND_RETRY_LAST Allocation failed - JavaScript heap out of memory

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: 1: 0x8fa090 node::Abort() [/usr/share/kibana/bin/../node/bin/node]

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: 2: 0x8fa0dc [/usr/share/kibana/bin/../node/bin/node]

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: 3: 0xb0052e v8::Utils::ReportOOMFailure(v8::internal::Isolate, char const, bool) [/usr/share/kibana/bin/../node/bin/nod

Mar 13 17:35:45 elastic.linuxsysadmins.local kibana[11128]: 4: 0xb00764 v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate, char const, bool) [/usr/share/kibana/bin/.

Solution for the Wazuh API connection warning

If your Kibana dashboard shows a warning about connecting to the Wazuh API, or shows the error below:

3099 - ERROR3099 - Some Wazuh daemons are not ready in node 'node01' (wazuh-db->failed)

Switch to the Wazuh Manager server and restart the services.
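Before restarting, it can help to see which daemons are actually down, since the error above names only the one that failed in this case (wazuh-db). Wazuh 3.x provides `/var/ossec/bin/ossec-control status`, which prints one line per daemon; the sketch below assumes lines of the form `wazuh-db not running...` or `wazuh-db is running...`, so verify that against your own output. `stopped_daemons` is a hypothetical helper.

```shell
# Hypothetical helper: list daemons reported as stopped.
# Expects "ossec-control status" output on stdin; assumed format:
#   wazuh-db not running...
#   ossec-analysisd is running...
stopped_daemons() {
    awk '/ not running/ { print $1 }'
}

# Example (on the Wazuh Manager):
#   /var/ossec/bin/ossec-control status | stopped_daemons
```

Some daemons may legitimately be stopped depending on configuration (for example wazuh-clusterd when clustering is disabled), so read the list before treating every entry as a fault.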

# systemctl restart wazuh-api

# systemctl restart wazuh-manager

A few more troubleshooting steps we have gone through are as follows.

[root@elastic ~]# curl -X GET "192.168.0.131:9200/_cat/indices/.kib*?v&s=index"

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .kibana_1 bfgsdC23TWCcdiTCqZsOHg 1 0 0 0 230b 230b

green open .kibana_task_manager_1 47p0T3PjTaKRzmhOv1IWOg 1 0 0 0 283b 283b

[root@elastic ~]#

[root@elastic ~]# curl -XDELETE http://192.168.0.131:9200/.kibana_1

{"acknowledged":true}

[root@elastic ~]#

We will keep updating these troubleshooting steps as we face similar issues in the future.
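Before deleting a Kibana index as shown above, it is worth checking what it holds: here `.kibana_1` had a `docs.count` of 0, so nothing was lost, but that index normally stores Kibana saved objects such as index patterns and dashboards. The sketch below pulls a named column out of the verbose `_cat/indices` output; `cat_column` is a hypothetical helper (the `_cat` APIs can also select columns server-side with the `h=` parameter).

```shell
# Hypothetical helper: print one column, selected by header name, from
# verbose (?v) Elasticsearch _cat output read on stdin.
cat_column() {
    awk -v col="$1" '
        NR == 1 { for (i = 1; i <= NF; i++) if ($i == col) c = i; next }
        c { print $c }'
}

# Example: list the Kibana index names, then their document counts
#   curl -s "192.168.0.131:9200/_cat/indices/.kib*?v&s=index" | cat_column index
#   curl -s "192.168.0.131:9200/_cat/indices/.kib*?v&s=index" | cat_column docs.count
```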

Conclusion

The Wazuh open source security tool provides production-ready software for analyzing our logs. We will come up with more articles related to Wazuh. Subscribe to our newsletter and stay with us.
