Introduction

In our earlier guide, we saw how to recover a deleted logical volume. In this guide, we will walk through how to recover from a deleted physical volume or a failed disk.

The ability to recover filesystems is one of the things that makes logical volume management (LVM) easier to live with. If you are new to Linux and looking for a troubleshooting guide, keep reading. LVM is a big topic, and we will try to cover most of the scenarios you are likely to meet in production in our guides.

If you missed our previously published guides on LVM, have a look at them first.

Current setup

These are the PVs, VGs, and LVs currently used in our lab setup.

Here we can see that three physical disks (/dev/sdb, /dev/sdc and /dev/sdd) were used to create the vg_data volume group.

[root@prod-srv-01 ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda3 rhel lvm2 a-- 98.41g 0

/dev/sdb vg_data lvm2 a-- <20.00g 0

/dev/sdc vg_data lvm2 a-- <20.00g <9.99g

/dev/sdd vg_data lvm2 a-- <20.00g 0

[root@prod-srv-01 ~]#

[root@prod-srv-01 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

rhel 1 3 0 wz--n- 98.41g 0

vg_data 3 2 0 wz--n- <59.99g <9.99g

[root@prod-srv-01 ~]#

[root@prod-srv-01 ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home rhel -wi-ao---- 30.98g

root rhel -wi-ao---- 63.46g

swap rhel -wi-ao---- 3.96g

lv_data vg_data -wi-ao---- 25.00g

lv_u01 vg_data -wi-ao---- 25.00g

[root@prod-srv-01 ~]#

[root@prod-srv-01 ~]# lvs -a -o +devices

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert Devices

home rhel -wi-ao---- 30.98g /dev/sda3(1015)

root rhel -wi-ao---- 63.46g /dev/sda3(8947)

swap rhel -wi-ao---- 3.96g /dev/sda3(0)

lv_data vg_data -wi-ao---- 25.00g /dev/sdb(0)

lv_data vg_data -wi-ao---- 25.00g /dev/sdc(0)

lv_u01 vg_data -wi-ao---- 25.00g /dev/sdd(0)

lv_u01 vg_data -wi-ao---- 25.00g /dev/sdc(1281)

[root@prod-srv-01 ~]#

The filesystems we are using under the vg_data volume group are /u01 and /data.

[root@prod-srv-01 ~]# df -hP

Filesystem Size Used Avail Use% Mounted on

devtmpfs 889M 0 889M 0% /dev

tmpfs 909M 0 909M 0% /dev/shm

tmpfs 909M 8.7M 900M 1% /run

tmpfs 909M 0 909M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 64G 1.9G 62G 3% /

/dev/mapper/vg_data-lv_data 25G 24G 1.2G 96% /data

/dev/mapper/rhel-home 31G 254M 31G 1% /home

/dev/sda2 1014M 249M 766M 25% /boot

/dev/sda1 599M 6.9M 592M 2% /boot/efi

tmpfs 182M 0 182M 0% /run/user/0

/dev/mapper/vg_data-lv_u01 25G 6.4G 19G 26% /u01

[root@prod-srv-01 ~]#

Failed Disk or Deleted Physical Volume

This guide covers two scenarios: an accidentally deleted physical volume and a failed disk.

Either you have removed one of the physical volumes by running the pvremove command, or one of the physical disks has failed.

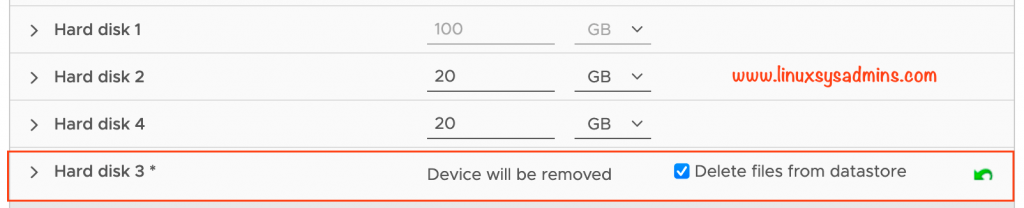

To create this guide, I deleted the third disk from the VMware side.

After the disk failed, I rebooted, and the server did not come up because the filesystems on the two logical volumes could not be mounted. To bring the server up, I commented out their entries in /etc/fstab, after which it booted successfully.
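Commenting out the entries can be done by hand in an editor. As a minimal sketch of the same step, the hypothetical helper below (not part of the original procedure) prints a copy of an fstab file with the entry for one mount point commented out, leaving the file itself untouched so the result can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper (an illustration, not the author's method):
# print FSTAB_FILE with the entry for MOUNT_POINT commented out.
# The input file is only read, never modified.
comment_out_mount() {
    fstab_file=$1
    mount_point=$2
    awk -v mnt="$mount_point" '
        # Leave lines that are already comments alone.
        /^[ \t]*#/ { print; next }
        # The second fstab field is the mount point.
        $2 == mnt  { print "#" $0; next }
        { print }
    ' "$fstab_file"
}
```

For example, `comment_out_mount /etc/fstab /u01 > /tmp/fstab.new` writes the edited copy to a scratch file that can be compared with the original before replacing it. Another option, if it suits your environment, is to add the `nofail` mount option to non-critical filesystems so that a missing device does not stop the boot.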

Adding new Disk

The failed disk has now been replaced with a new one. This is the output from dmesg after adding the new disk.

[ 352.323990] sd 0:0:2:0: Attached scsi generic sg4 type 0

[ 352.324566] sd 0:0:2:0: [sdd] 41943040 512-byte logical blocks: (21.5 GB/20.0 GiB)

[ 352.324589] sd 0:0:2:0: [sdd] Write Protect is off

[ 352.324591] sd 0:0:2:0: [sdd] Mode Sense: 61 00 00 00

[ 352.325310] sd 0:0:2:0: [sdd] Cache data unavailable

[ 352.325311] sd 0:0:2:0: [sdd] Assuming drive cache: write through

[ 352.334528] sd 0:0:2:0: [sdd] Attached SCSI disk

The newly added disk is visible as /dev/sdd.

[root@prod-srv-01 ~]# lsblk -S

NAME HCTL TYPE VENDOR MODEL REV TRAN

sda 0:0:0:0 disk VMware Virtual disk 2.0

sdb 0:0:1:0 disk VMware Virtual disk 2.0

sdc 0:0:3:0 disk VMware Virtual disk 2.0

sdd 0:0:2:0 disk VMware Virtual disk 2.0

sr0 3:0:0:0 rom NECVMWar VMware SATA CD00 1.00 sata

[root@prod-srv-01 ~]#

Recovering Metadata of Deleted Physical Volume

Now let’s start recovering the metadata for the deleted physical volume.

When listing PVs, VGs, or LVs, LVM warns that the device with a given UUID is missing.

[root@prod-srv-01 ~]# pvs

WARNING: Couldn't find device with uuid 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5.

WARNING: VG vg_data is missing PV 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5 (last written to /dev/sdc).

PV VG Fmt Attr PSize PFree

/dev/sda3 rhel lvm2 a-- 98.41g 0

/dev/sdb vg_data lvm2 a-- <20.00g 0

/dev/sdc vg_data lvm2 a-- <20.00g 0

[unknown] vg_data lvm2 a-m <20.00g <9.99g

[root@prod-srv-01 ~]#

[root@prod-srv-01 ~]#

[root@prod-srv-01 ~]# vgs

WARNING: Couldn't find device with uuid 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5.

WARNING: VG vg_data is missing PV 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5 (last written to /dev/sdc).

VG #PV #LV #SN Attr VSize VFree

rhel 1 3 0 wz--n- 98.41g 0

vg_data 3 2 0 wz-pn- <59.99g <9.99g

[root@prod-srv-01 ~]#

[root@prod-srv-01 ~]#

[root@prod-srv-01 ~]# lvs

WARNING: Couldn't find device with uuid 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5.

WARNING: VG vg_data is missing PV 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5 (last written to /dev/sdc).

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home rhel -wi-ao---- 30.98g

root rhel -wi-ao---- 63.46g

swap rhel -wi-ao---- 3.96g

lv_data vg_data -wi-----p- 25.00g

lv_u01 vg_data -wi-----p- 25.00g

[root@prod-srv-01 ~]#

Copy the UUID and look it up in the archive and backup files using grep. Before the reboot, the reported UUID belonged to the /dev/sdc device.

[root@prod-srv-01 ~]# cat /etc/lvm/archive/vg_data_00004-687002922.vg | grep -B 2 -A 9 "57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5"

pv1 {

id = "57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5"

device = "/dev/sdc" # Hint only

status = ["ALLOCATABLE"]

flags = []

dev_size = 41943040 # 20 Gigabytes

pe_start = 2048

pe_count = 5119 # 19.9961 Gigabytes

}

[root@prod-srv-01 ~]#
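The archive file above was picked by hand. If several archives exist, a small helper can show which of them still mention the missing UUID. This is a minimal sketch: the function name is hypothetical, and it assumes the archives are .vg files in a single directory such as /etc/lvm/archive.

```shell
#!/bin/sh
# Hypothetical helper: list the metadata files in DIR that mention a PV UUID.
# Files are printed in name order, which for LVM's numbered archive names
# follows the archive sequence number.
archives_with_uuid() {
    dir=$1
    uuid=$2
    # -l prints only the names of matching files;
    # -F treats the UUID as a fixed string rather than a regular expression.
    grep -lF -- "$uuid" "$dir"/*.vg 2>/dev/null
}
```

On the lab server this would be called as `archives_with_uuid /etc/lvm/archive 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5`. Running `vgcfgrestore --list vg_data` should also show the available archives together with their descriptions, which helps in choosing one taken before the disk was lost.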

[root@prod-srv-01 ~]# cat /etc/lvm/backup/vg_data | grep -B 2 -A 9 "57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5"

pv1 {

id = "57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5"

device = "/dev/sdc" # Hint only

status = ["ALLOCATABLE"]

flags = []

dev_size = 41943040 # 20 Gigabytes

pe_start = 2048

pe_count = 5119 # 19.9961 Gigabytes

}
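The figures in this PV block can be cross-checked with a little shell arithmetic. The sketch below assumes the volume group uses a 4 MiB extent size (8192 sectors of 512 bytes). That value does not appear in the grep output, so treat it as an assumption, although it is consistent with the reported pe_count. The function names are made up for this illustration.

```shell
#!/bin/sh
# Convert a count of 512-byte sectors to MiB (2048 sectors per MiB).
sectors_to_mib() {
    echo $(( $1 / 2048 ))
}

# Number of whole physical extents that fit on a PV.
# All three arguments are in 512-byte sectors.
pe_count_for() {
    dev_size=$1
    pe_start=$2
    extent_size=$3   # assumed: 8192 sectors = 4 MiB
    echo $(( (dev_size - pe_start) / extent_size ))
}

sectors_to_mib 41943040            # dev_size from the metadata; prints 20480
pe_count_for 41943040 2048 8192    # prints 5119
```

The first result (20480 MiB, that is 20 GiB) matches the size dmesg reported for the replacement disk, and the second matches pe_count = 5119, which suggests the new disk is large enough to take over the old PV's layout.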

[root@prod-srv-01 ~]#

Let’s do a dry run before recreating the deleted physical volume, using its UUID and pointing to the available archive file and the newly added disk.

[root@prod-srv-01 ~]# pvcreate --test --uuid "57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5" --restorefile /etc/lvm/archive/vg_data_00004-687002922.vg /dev/sdd

TEST MODE: Metadata will NOT be updated and volumes will not be (de)activated.

WARNING: Couldn't find device with uuid NFe2g1-YGoI-SEla-dqxx-UYSU-czmv-aE93o1.

WARNING: Couldn't find device with uuid 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5.

WARNING: Couldn't find device with uuid SZm70U-OGDT-mdcd-AA0T-vLal-oZzF-C23o96.

WARNING: Couldn't find device with uuid 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5.

WARNING: VG vg_data is missing PV 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5 (last written to /dev/sdc).

Physical volume "/dev/sdd" successfully created.

[root@prod-srv-01 ~]#

--test – Do a dry run

--uuid – The UUID to use for the newly created physical volume

--restorefile – Read the archive file produced by vgcfgbackup
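The dry-run-then-apply habit used in this guide can be captured in a small wrapper. This is only a sketch with a hypothetical function name: it runs a command with --test first and repeats it without --test only if the dry run exits successfully. It does not replace reading the dry-run output yourself.

```shell
#!/bin/sh
# Hypothetical wrapper: run a command with --test first, then for real
# only if the dry run exits with status 0.
dry_run_then_apply() {
    cmd=$1
    shift
    if ! "$cmd" --test "$@"; then
        echo "dry run failed; not applying: $cmd $*" >&2
        return 1
    fi
    "$cmd" "$@"
}
```

With the command from this guide, the call would look like `dry_run_then_apply pvcreate --uuid "57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5" --restorefile /etc/lvm/archive/vg_data_00004-687002922.vg /dev/sdd`.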

To create the new PV, remove --test and run the same command again.

# pvcreate --uuid "57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5" --restorefile /etc/lvm/archive/vg_data_00004-687002922.vg /dev/sdd

The new PV is ready.

[root@prod-srv-01 ~]# pvcreate --uuid "57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5" --restorefile /etc/lvm/archive/vg_data_00004-687002922.vg /dev/sdd

WARNING: Couldn't find device with uuid NFe2g1-YGoI-SEla-dqxx-UYSU-czmv-aE93o1.

WARNING: Couldn't find device with uuid 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5.

WARNING: Couldn't find device with uuid SZm70U-OGDT-mdcd-AA0T-vLal-oZzF-C23o96.

WARNING: Couldn't find device with uuid 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5.

WARNING: VG vg_data is missing PV 57aqQz-eQud-0PNI-huvi-2flz-GgWR-AjdBW5 (last written to /dev/sdc).

Physical volume "/dev/sdd" successfully created.

[root@prod-srv-01 ~]#

Verify the Created Physical Volume

Use the pvs command to verify it. We will still get a warning because the VG needs to be restored.

[root@prod-srv-01 ~]# pvs

WARNING: PV /dev/sdd in VG vg_data is missing the used flag in PV header.

PV VG Fmt Attr PSize PFree

/dev/sda3 rhel lvm2 a-- 98.41g 0

/dev/sdb vg_data lvm2 a-- <20.00g 0

/dev/sdc vg_data lvm2 a-- <20.00g 0

/dev/sdd vg_data lvm2 a-- <20.00g <9.99g

[root@prod-srv-01 ~]#

Restoring Volume Group

Next, restore the volume group from the available backup, starting with a dry run.

[root@prod-srv-01 ~]# vgcfgrestore --test -f /etc/lvm/backup/vg_data vg_data

TEST MODE: Metadata will NOT be updated and volumes will not be (de)activated.

Restored volume group vg_data.

[root@prod-srv-01 ~]#

As usual, we did a dry run before restoring from the backup. Now run the actual restore.

[root@prod-srv-01 ~]# vgcfgrestore -f /etc/lvm/backup/vg_data vg_data

Restored volume group vg_data.

[root@prod-srv-01 ~]#

Verify the status of the logical volumes using lvscan. They will be in the inactive state.

[root@prod-srv-01 ~]# lvscan

inactive '/dev/vg_data/lv_data' [25.00 GiB] inherit

inactive '/dev/vg_data/lv_u01' [25.00 GiB] inherit

ACTIVE '/dev/rhel/swap' [3.96 GiB] inherit

ACTIVE '/dev/rhel/home' [30.98 GiB] inherit

ACTIVE '/dev/rhel/root' [63.46 GiB] inherit

[root@prod-srv-01 ~]#

Activate the volume group; this brings the logical volumes out of the inactive state.

[root@prod-srv-01 ~]# vgchange -ay vg_data

2 logical volume(s) in volume group "vg_data" now active

[root@prod-srv-01 ~]#

Once again, run lvscan to verify the status.

[root@prod-srv-01 ~]# lvscan

ACTIVE '/dev/vg_data/lv_data' [25.00 GiB] inherit

ACTIVE '/dev/vg_data/lv_u01' [25.00 GiB] inherit

ACTIVE '/dev/rhel/swap' [3.96 GiB] inherit

ACTIVE '/dev/rhel/home' [30.98 GiB] inherit

ACTIVE '/dev/rhel/root' [63.46 GiB] inherit

[root@prod-srv-01 ~]#

We are good now, with all the devices as before.

[root@prod-srv-01 ~]# lvs -o +devices

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert Devices

home rhel -wi-ao---- 30.98g /dev/sda3(1015)

root rhel -wi-ao---- 63.46g /dev/sda3(8947)

swap rhel -wi-ao---- 3.96g /dev/sda3(0)

lv_data vg_data -wi-ao---- 25.00g /dev/sdb(0)

lv_data vg_data -wi-ao---- 25.00g /dev/sdc(0)

lv_u01 vg_data -wi-ao---- 25.00g /dev/sdd(0)

lv_u01 vg_data -wi-ao---- 25.00g /dev/sdc(1281)

[root@prod-srv-01 ~]#

Mount and Verify the Filesystems

Mount the logical volumes on their respective mount points, or run mount -av if the /etc/fstab entries exist (uncomment them first if you commented them out earlier).

[root@prod-srv-01 ~]# mount /dev/mapper/vg_data-lv_u01 /u01/

[root@prod-srv-01 ~]#

[root@prod-srv-01 ~]# mount /dev/mapper/vg_data-lv_data /data/

List the mount points and verify the size.

[root@prod-srv-01 ~]# df -hP /data/ /u01/

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_data-lv_data 25G 24G 1.2G 96% /data

/dev/mapper/vg_data-lv_u01 25G 6.4G 19G 26% /u01

[root@prod-srv-01 ~]#

Check that the data is intact.

[root@prod-srv-01 ~]# ls -lthr /data/ /u01/

/data/:

total 19G

-rw-r--r--. 1 root root 2.0G Dec 13 23:57 web_mysql_db_backup.sql

drwxr-xr-x. 2 root root 86 Dec 13 23:58 backups

-rw-r--r--. 1 root root 5.0M Dec 14 00:00 backups.tar.gz

/u01/:

total 6.2G

-rw-r--r--. 1 root root 200M Dec 14 00:10 app.log

-rw-r--r--. 1 root root 20M Dec 14 00:10 oracle_error.log

-rw-r--r--. 1 root root 1.5G Dec 14 00:12 linux.x64_11gR2_database_1of2.zip

-rw-r--r--. 1 root root 1.0G Dec 14 00:12 linux.x64_11gR2_database_2of2.zip

-rw-r--r--. 1 root root 2.0G Dec 14 00:13 linuxamd64_12102_database_1of2.zip

-rw-r--r--. 1 root root 1.5G Dec 14 00:13 linuxamd64_12102_database_2of2.zip

[root@prod-srv-01 ~]#

All looks good.

That’s it: we have successfully recovered from a failed disk or a deleted physical volume.

Conclusion

Recovering a deleted physical volume follows a similar approach to recovering a logical volume. However, a few additional steps are required: adding a new disk and creating the physical volume on it with the existing UUID. Subscribe to our newsletter and stay tuned for more troubleshooting guides.

Good Afternoon,

I understand what you have laid out. What I don’t understand is the last step. Surely the file system will be damaged considering a 20GB chunk of it has been replaced. I would have thought that fsck would need to be run over the file system and the files that were contained in that missing chunk be moved to lost+found.

Thanks

Mal